Whether you love it, loathe it, or just have no real feelings on the matter, OpenAI's hungry-hippo-for-dollars ChatGPT isn't going to disappear any time soon. Neither will the need for it to be powered by Nvidia's mega-GPUs. However, that doesn't mean its creators aren't looking to cut costs somewhere, and one report claims that a potential solution is for OpenAI to use its own AI chips.

That's essentially what's being suggested in a report by The Financial Times (via Reuters), which claims that OpenAI has signed a deal with US semiconductor firm Broadcom to design and manufacture its own machine learning processors, for internal use next year.

This is backed up by a statement from Broadcom's CEO, Hock Tan, who said that the company had secured $10 billion in AI system orders from a 'new customer', which should result in significant revenue growth in 2026.

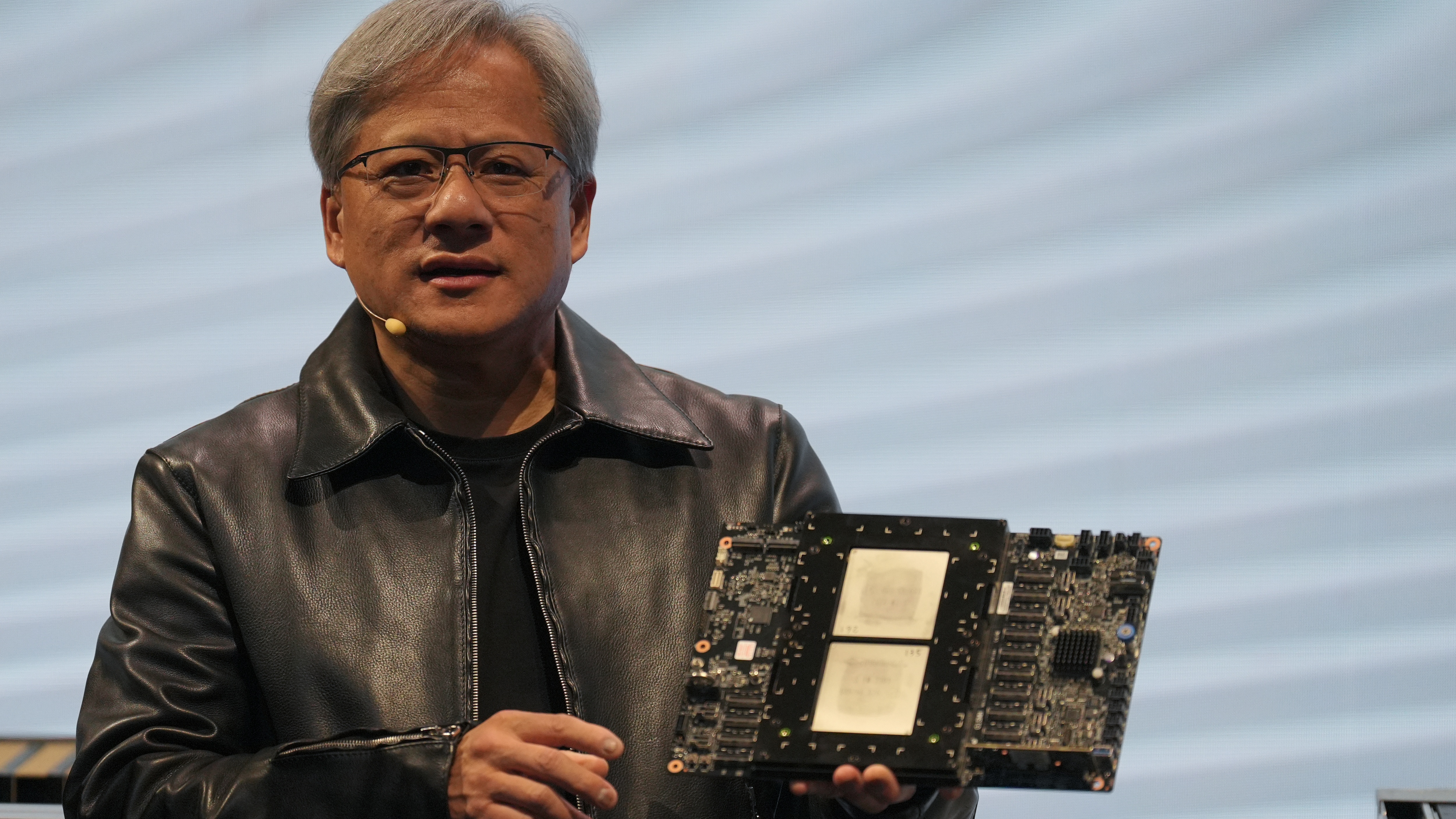

As things currently stand, OpenAI uses large-scale computer systems with Nvidia chips for AI model training and inference.

It's not the only company to do so, of course, which is why Nvidia's data centre division pulled in a total of $115.2 billion last year, more than AMD and Intel's entire revenues combined. And it's not just that Team Green is shifting vast numbers of its megachips; they're also very expensive.

Precisely how expensive isn't accurately known, but OpenAI, Meta, and Microsoft have all forked out billions of dollars for Hopper and Blackwell processors. Such expenditure isn't sustainable unless the end users of their AI systems can be leveraged into paying for it all in some way.

A logical alternative is to simply spend less money on AI chips in the first place. The thing is, similar processors from AMD or Intel are just as pricey, which is why the likes of Amazon and Google went down the route of using in-house components.

Both firms have the financial resources to do this directly, but OpenAI doesn't, which is why the claim that Broadcom is doing it all for them is almost certainly true. After all, Broadcom already has such products in its portfolio, such as the snappily-titled 3.5D XDSiP.

Even if this all comes to pass, OpenAI is still going to be reliant on Nvidia's GPUs for a good while yet. That's because its entire current software stack is written with that hardware in mind, so it will take many moons to shift it across to Broadcom's platform and even more rotations of our solar system to get it working as smoothly as it currently does.

Nvidia stopped being a gaming-first company a long time ago, and until the AI bubble bursts or deflates somewhat, its insatiable appetite for dollars means that Team Green will continue to focus almost all its efforts on machine learning megachips.

OpenAI switching to Broadcom-built chips is certainly news, but it's absolutely not a sliver of light at the end of the tunnel for wallet-beaten PC gamers.
